Updated 22/12/22

Idea Engine

Idea Engine is an interactive mind map where you can bring your ideas to life. Load custom models, images and sounds to create interactive, non-linear stories, games or training scenarios.

An early prototype won the Mixed Reality Challenge: StereoKit.

Currently in development; for updates, see TikTok & Twitter.

Current state

For mobile and PC VR

Hand tracking or controllers

Coming to Steam & Oculus

As I continued developing the prototype, I realised Idea Engine's potential to let people create and share more complex interactive stories and experiences.

Existing functionality being tested

Import assets - upload models (including animations), images and audio from your PC, or via your phone's browser directly to mobile VR headsets.

Multi-state nodes - each node can have multiple states, each with its own colour, model, image, icon, decoration, position offset and more.

Hierarchical - create parent-child relationships between nodes, with optional text labels and line styles. Lock children to their parents or allow them to move freely.

Multiple locations - add portals that let users navigate between locations, each with its own background images, music and animated lighting.

High-level scripting - create scripts to change the visibility and state of nodes, play sound effects, update variables and more. Create variables and update them using the drag-and-drop formula editor.

Events - bring the world to life with events. Scripts can be triggered by button presses, state changes, overlaps, grabbing and releasing nodes, and looking at nodes.

Interactivity - grab and move objects to get a better look at them. You can configure nodes to snap back into position, float where they are dropped, or be added to the built-in inventory system so they can be carried to other locations.

In-game asset editing - decorate assets with coloured lines, add masks to images to remove backgrounds, and record your own audio while in VR.

Text editing - long-form text is encouraged, with finger-based editing (cut, paste etc.) within VR. You can also compose your text in an external editor and then quickly paste it into the appropriate nodes using your browser.

Transitions - with a focus on storytelling, you will be able to configure transition effects for when nodes change state or when the user navigates to a new location. This helps to maintain the mood.

Credits - you are encouraged to use Creative Commons licensed assets (CC0 / CC-BY) and enter the licence details. A credits page is generated automatically, where users can browse all your assets with their appropriate acknowledgements.

AI Art / Text - you can use AI to generate whole stories, as well as descriptions and images for the nodes in your stories.

Locomotion - move around using hand tracking or controllers, with both walking and flying modes. You can also teleport to configured locations.

Working on this week

General fixes and improvements - in preparation for alpha testing.

Coming soon

Project sharing - there will be multiple ways to share your project, including uploading it to the cloud or packaging it into a single file to share with friends.

Multiplayer - experience VR adventures with your friends. I plan to use Ready Player Me avatars.

Metaverse - Idea Engine will have its own metaverse with deep linking support. You can optionally link your project to metaverse locations to make it publicly available. More details coming soon.

Reviews - users will be able to leave ratings, reviews and feedback on your published stories.

NLP - AI will be used to prevent hate speech from being submitted in reviews. It will also objectively tag and categorise stories based on their text content, and flag potentially offensive stories for manual review.

Advanced scripting - scripting will continually evolve. The aim is to keep it high level while providing enough tools to satisfy the needs of creators.

Highlights

I regularly post updates on Twitter and TikTok. Here are some highlights:

Infinite floor with in-game compass & physical direction indicators

Using AI to generate text and images

Hand-tracking based locomotion

Example of a single multi-state node

Using NLP to categorise stories and detect hate speech

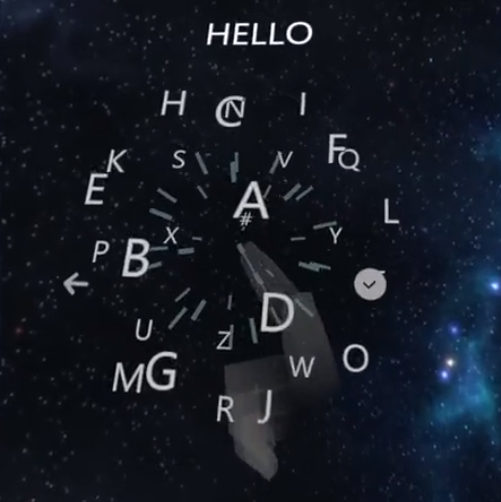

3D text input - will be used when browsing the metaverse

Fading UI at distance to remove artifacts